TL;DR

- The MVP does not need to be perfect.

- The MVP does not need to be perfect.

- The MVP does not need to be perfect (deliberate duplication).

- The MVP does not need to be perfect, but it does need to be completed on time.

- Prioritise the work where we have the least confidence in our estimates.

- Prioritise making the MVP function over making the MVP better, stable, clean etc.

- Prioritise getting to a point where we can test the interaction of large components rather than fleshing out internal testing on individual ones.

- Prioritise getting to a point where we can fully test product use cases and get customer feedback.

The approach described here isn’t just theoretical; it is borne out by decades of cumulative experience with MVP product development. An MVP can refer to an entirely new product or simply a new feature idea in an existing product.

If we’re not dealing with an MVP then the approach here is possibly no longer optimal. Recognising the right approach, depending on the maturity of the project, creates an enormous competitive advantage.

Scenario

For an MVP the following should be accepted as true:

- We have a deadline to release something by X date.

- We will fail if we don’t release a coherent product by that time, because any significant MVP launch is surrounded by many other moving parts related to sales, marketing, resource allocation etc.

- We’ll only have a small number of customers on release, perhaps just one.

- It’s still possible that we’ll decide not to proceed with the MVP before or after release.

- This is SaaS not a shuttle launch – it doesn’t have to be bug-free first time.

Taking these points as axiomatic (self-evident), the following are also true:

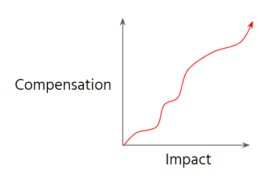

- Releasing a perfect MVP is not a requirement. Perfect is commonly the enemy of done. If you don’t feel at least a little discomfort when releasing an MVP you’ve spent too long on it. Here’s a great article from one of the LinkedIn founders about ‘waiting too long’ – https://www.linkedin.com/pulse/arent-any-typos-essay-we-launched-too-late-reid-hoffman/

- Non-functional requirements around performance/scalability are much less important.

In summary, it can be slow, buggy, fall over and be tough to use, but as long as it does what we’d expected then we’re good.

Risks

I’d summarise the key risks from our company’s (Echobox) perspective in order as follows. Feel free to disagree:

Top priority risk: Our biggest risk is that we decide that constructing an MVP is not worth doing and decide to cancel the project altogether. Minimising this risk amounts to minimising the developer time spent before making this decision.

High priority risk: The next risk is that we want to deliver an MVP but fail to do so by the deadline. This also amounts to failure, but basically from being too late to market. We are typically prepared to scrap any amount of nice-to-have functionality to ensure that we meet the deadline. The Product team should be scoping down the MVP to the minimum feature set, but if you identify any opportunities to reduce scope or complexity as you go along – let them know!

Medium priority risk: Next is that we release what we want on time, but it’s so buggy that we can’t even manually concierge around the failures (i.e. get a human to do the work behind a ‘curtain’) in the short term. This is close to failure, but we can bow and scrape, recover from it and fix bugs in the days immediately after release. A strong element of fake it until we make it is fine.

Low priority risk: The final risk is that we release something and it’s buggy so maybe customers don’t get a perfect impression due to teething issues, but otherwise it’s fine.

Risk Management

Assuming you agree with this summary, my reading of the above is that we should:

Prioritise doing work that is likely to uncover extra work. Or, rephrased, prioritise doing work that makes our remaining time estimate more certain.

Basically, we’ve planned out product tickets and tech tickets as best we can, but there will be work that needs to be done that we haven’t planned. There will be:

- Extra work we could’ve planned for but missed

- Extra work we couldn’t have foreseen initially

In addition, we’ll lose available development time from:

- Planned work overrunning

- Loss of working capacity for external reasons, e.g. one of us gets sick.

The squad lead will be giving estimates regularly, but frankly these shouldn’t be relied upon when deciding whether to change focus or approach. It’s safer to assume an estimate is wrong by 2 months and deliver sooner than to assume it’s correct.

Derisking

We mitigate the highest priority risks by discovering new work.

Once we have the clearest possible picture of the work to be done, we can make an informed decision about whether to continue with the MVP or not. The earlier that information comes in, the less of a risk and sunk cost it is to cancel the product.

Once we have the clearest possible picture of the development effort involved, we can decide what features to sacrifice to address our next risk: missing the deadline. We may still have to remove specced features at this stage.

Once we’ve got all of our features decided, we can more effectively decide what level of bug elimination and testing we are able to deliver in the remaining time available.

Discovering new work

New, unplanned-for work that extends our estimated completion date will exist in areas where:

- We’re inexperienced with technologies we’re using

- We’re trying to do something complicated

- We’re doing something the company hasn’t done before

As we begin to do tasks in these categories we’ll get a clearer picture of how much effort they’ll take. These types of tasks are where the uncertainty lies – we could think they’re simple and then find they balloon into weeks of extra work. More often than not our estimates will be severe underestimates.

Minimising these types of tasks is part of the technical planning for this MVP, but they will obviously still exist.

The key to minimising the risk with these tasks is to start them early. We should prioritise pushing back the frontiers on unfamiliar work rather than perfecting the work that we are more comfortable with.

Do the required work we’re least comfortable with.
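As a rough illustration of that rule, here is a minimal Python sketch that orders a backlog so the least certain estimates come first. The task names and confidence scores are invented for the example, and the `Task` class and `order_by_uncertainty` function are hypothetical helpers rather than anything we actually use.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    estimate_weeks: float
    confidence: float  # hypothetical score: 0.0 = pure guess, 1.0 = done many times before


def order_by_uncertainty(tasks):
    """Return the tasks with the least confident estimates first."""
    return sorted(tasks, key=lambda task: task.confidence)


# Illustrative backlog; every number here is made up.
backlog = [
    Task("Webservice CRUD endpoints", 4, 0.9),
    Task("React frontend", 4, 0.8),
    Task("Unfamiliar third-party integration", 4, 0.3),
    Task("Something the company has never built", 4, 0.2),
    Task("S3 file storage", 4, 0.95),
]

ordered = order_by_uncertainty(backlog)
for task in ordered:
    print(f"{task.name}: {task.estimate_weeks}w (confidence {task.confidence})")
```

In practice nobody needs a script for this. The point is only that the sort key is confidence in the estimate, not size, ease or how enjoyable the work is.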

Example

Suppose you’re building a product, and you plan to build 5 components to complete this product. You estimate each component as taking a month, and coincidentally you have 5 months to complete the work. In this situation, spending your first month taking the first component from start to finish is a huge mistake. What you don’t realise until you start the 4th component is that you actually need a 6th component. Because you discovered this late, you now have no time to revise your plans, and you’re screwed.

A better approach is to start working on the tasks you’re least sure of, as by working on them you shine a light on the uncertainties.
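To put rough numbers on this example, the sketch below compares how much room you have to react at the moment the 6th component is discovered. The sequential case uses the figures from the example. The uncertainty-first case rests on an assumption of mine: that one month of exploring the risky parts surfaces the 6th component and still counts as one month of useful work.

```python
DEADLINE_MONTHS = 5
PLANNED_COMPONENTS = 5
HIDDEN_COMPONENTS = 1  # the 6th component nobody planned for
MONTHS_PER_COMPONENT = 1

TOTAL_WORK = (PLANNED_COMPONENTS + HIDDEN_COMPONENTS) * MONTHS_PER_COMPONENT


def position_at_discovery(months_elapsed, work_done):
    """Return (months left before the deadline, months of work still to do)."""
    return DEADLINE_MONTHS - months_elapsed, TOTAL_WORK - work_done


# Sequential: components 1-3 are finished before starting the 4th reveals the 6th.
seq_months_left, seq_work_left = position_at_discovery(months_elapsed=3, work_done=3)

# Uncertainty-first (assumed): the gap shows up after one month of exploration.
early_months_left, early_work_left = position_at_discovery(months_elapsed=1, work_done=1)

print(f"Sequential:        {seq_work_left} months of work, {seq_months_left} months left")
print(f"Uncertainty-first: {early_work_left} months of work, {early_months_left} months left")
```

Both paths end up one month short, so starting with the uncertain work doesn’t make the extra component disappear. What changes is that you find out with 4 months left rather than 2, and with 1 month sunk rather than 3, which leaves far more room to cut scope or cancel.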

Knowns/Unknowns

Some areas of the planned development for MVPs are well understood and others less so.

Here are some of the well understood areas:

- Making a simple MongoDB database

- Making a Java Vert.x webservice that acts on the database through Morphia

- Making a React frontend that makes calls to the webservice and provides simple user-facing functionality

- Implementing basic testing for all of the above

- Adding and reading files from S3

- Provisioning simple AWS services in CDK

All of the above are the bread and butter of Echobox, and there are solid examples of all this in more established repositories. This is the bulk of the work to be done, and it’s generally easy to plan technically, not risky and very unlikely to cause our expected development time to balloon.

Sure, it might not be “easy” work, and the specifics of it aren’t trivial, and it takes time to do it well and right, but it’s the same core work to support a product as 10,000 other software companies have done – it’s low risk. We’ll get this stuff done in the expected time periods without issue, and it’ll eventually be bug-free.

Less well-understood areas of an MVP can balloon considerably from their initial estimates, or turn out to be unworkable in a fundamental way that scuppers things.

This same MVP perspective should also apply to completely brand new features in an existing product, i.e. where we don’t know if the feature will continue in the product after its initial release.

Caveat: GDPR and Security

At the top I said that this is SaaS and not a shuttle launch, so not everything has to be perfect, but actually there are two areas where this does not apply at Echobox: GDPR and Security (which obviously overlap). Whilst we can play fast and loose (relatively speaking) with the rest of the functionality, GDPR and Security are the two places that I can see where we do need the product to be correct from the beginning, in order to meet legal requirements. As a result, testing these elements might warrant more focus than testing other aspects of the system. It’s also why we have created various reusable security SDKs which should effectively be used as plug and play in MVPs.

Thanks for reading my first techfood blog!